I love testing. Testing software is one of the greatest skills I've learned so far as a software engineer. This has, in part, led to a great affection for test-driven development (TDD), and in particular for the art of outside-in TDD. In this post I'm going to focus on Ruby on Rails and the testing frameworks RSpec and Capybara. Let's have a look.

What is Outside-In Test-Driven Development?

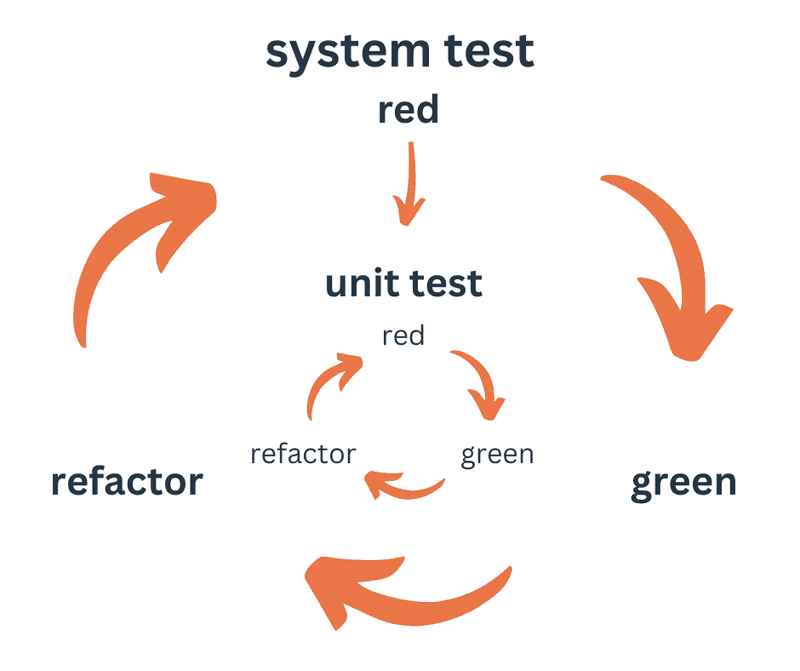

Outside-in test-driven development is the process of starting from the highest level of testing and usage of an application. Put yourself in your user's shoes and ask "what do I want this application to actually do?" Then, write a test that describes that very thing. Outside-in test-driven development builds on acceptance test-driven development: it defines a test from the user's perspective, then drills down into the nitty-gritty details, test-driving the deep implementation, before bubbling back up to the acceptance test level to fully implement the core functionality.

Behaviour-driven development focuses on the end user. Testing the end user's flow is a wonderful facet to add to a comprehensive test suite, but on its own it doesn't describe how to test and then implement the more technical aspects, for example at the database and controller levels. Outside-in TDD takes behaviour-driven development one step further by using it as a springboard for test-driving the whole application.

Example of Outside-In Test-Driven Development

Take a look at the diagram below. We start on the outside with the system test, which is the first code we write in the application. Of course, it's red, because nothing has been built yet. Then we take some steps towards the inside, where we find unit (or integration) tests, and follow the red, green, refactor cycle with those inner tests. That inner cycle can go around once, a few times or many times before we get to jump back out to the system test. When the system test is finally green, we can do a wider refactoring and then we're done... at least with that cycle. Then the next one starts.

Let's take a classic Todo List application as an example. Forgetting about things like authentication for the time being, let's just imagine a user comes to the application. What do they want to do? They want to add items to their todo list and probably mark them as complete, edit them, delete them and so on. Let's build our first feature, then.

Our First Feature

We start with a high-level system test that goes something like this:

- The user enters their todo item, let's call it "Buy milk", into the todo item input field.

- The user clicks the submit button labelled "Add todo".

- The user expects to see their todo item "Buy milk" in the list of todos.

Nice, we have our first test. In the process of making this test pass in our Ruby on Rails application, we'll do things like adding a route to our routes file, adding a todos controller, adding some views, adding a todo model, adding the todos table to the database via a migration and adding some creation logic. At each step of the way, we'll slow right down, put our system test on the back burner for a little while, focus on the inner unit or integration test cycle and test-drive the inner implementation: a red unit test, followed by a green unit test, and then a refactor of the unit under test.

We'll have a simple controller test to check that the controller receives the parameters and creates a new todo item from the text given. Before we can make that pass, we'll need to move inwards again and write a model test before adding the model, then make that pass and maybe refactor it. (Models tend to be pretty straightforward, so we might skip the refactoring there.) Only then can we come back up the chain to the controller test, which can now be turned green. Somewhere in the mix, we might want to add a view test, make that pass and then refactor the view.
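To make the inner layers a little more concrete, here is a framework-free Ruby sketch of what the first feature might drive out. It is an illustration under stated assumptions, not Rails code. `Todo` and `TodosController` are hypothetical stand-ins for the ActiveRecord model and the Rails controller, and the `title` attribute is an assumed name. An in-memory array plays the part of the `todos` table that the migration would create.

```ruby
# Framework-free sketch: Todo and TodosController are hypothetical
# stand-ins for the Rails model and controller, and an in-memory
# array plays the part of the todos table.
class Todo
  attr_reader :title

  @records = []

  class << self
    # Every saved todo, oldest first.
    def all
      @records.dup
    end

    # Build a todo and store it, loosely like ActiveRecord's create.
    def create(title:)
      new(title: title).tap { |todo| @records << todo }
    end
  end

  def initialize(title:)
    @title = title
  end
end

class TodosController
  # Loosely like a Rails create action: read the title from the nested
  # params and create a todo from it. There is no failure branch yet,
  # because nothing in the first feature asks for one.
  def create(params)
    Todo.create(title: params.fetch(:todo).fetch(:title))
  end

  # Stands in for the index view: the titles the user sees in the list.
  def index
    Todo.all.map(&:title)
  end
end

controller = TodosController.new
controller.create({ todo: { title: "Buy milk" } })
p controller.index # prints ["Buy milk"]
```

The sketch has no validation or error handling. Nothing in the first system test asks for it, so test-driving from the outside in simply doesn't produce it yet.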

Finally, after a few journeys around the red-green-refactor cycle, we have some nice, green unit and integration tests and, most importantly, a green system test! Our users can now add items to the todo list, amazing!

But wait, surely a good todo list application won't let us submit an empty item! I think our users would be irritated if they accidentally hit the submit button before typing anything and it actually added a blank item to their todo list. What are they supposed to do with a blank todo?! That brings us onto our second system test.

Our Second Feature

- The user leaves the todo item input field blank.

- The user clicks the submit button labelled "Add todo".

- The user sees a warning message that the todo item is blank.

- No item is added to the list of todos.

After writing our second system test, which starts out red, we'll realise that we need a controller test to check that the correct response is returned when the todo item is invalid. Before we can make that pass, we'll add a model test that validates that the todo item text is not blank. Then, once that's passing, we'll bubble up to the controller level and make that test pass, so that we're sure the correct error message is being returned. Finally, we might need a few touches to the view to display the error message to the user, and then the system test should be green as well!
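Here is a framework-free Ruby sketch of what the second feature's inner cycle might drive out. It is meant as an illustration, not as the Rails implementation. In an ActiveRecord model the rule would most likely be a single `validates :title, presence: true` line. Here, `Todo`, `TodosController`, the `title` attribute, the error message and the in-memory store are all hypothetical. Instead of rendering a view, the controller returns a status and the model's errors, which is the behaviour the controller test would check.

```ruby
# Framework-free sketch: Todo, TodosController and the error message are
# hypothetical stand-ins for the Rails model, controller and view copy.
class Todo
  attr_reader :title, :errors

  @records = []

  class << self
    def all
      @records.dup
    end

    def store(todo)
      @records << todo
    end
  end

  def initialize(title:)
    @title = title.to_s
    @errors = []
  end

  # Model-level rule driven by the second feature: no blank titles.
  def valid?
    @errors = []
    @errors << "Title can't be blank" if title.strip.empty?
    @errors.empty?
  end

  # Persist only valid records; return true or false, as ActiveRecord's save does.
  def save
    return false unless valid?

    self.class.store(self)
    true
  end
end

class TodosController
  # Returns a status and payload instead of rendering HTML.
  def create(params)
    todo = Todo.new(title: params.fetch(:todo).fetch(:title))
    if todo.save
      { status: :created, todo: todo }
    else
      { status: :unprocessable_entity, errors: todo.errors }
    end
  end
end

response = TodosController.new.create({ todo: { title: "   " } })
p response[:status] # prints :unprocessable_entity
p response[:errors] # prints ["Title can't be blank"]
p Todo.all.size     # prints 0
```

Stripping whitespace before the emptiness check mirrors Rails' `presence` validation, which also treats a whitespace-only string as blank.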

Alright, our user should be happy now: they can create todo items that are added to their list, but we won't let them pollute their beautiful list with blank items. Hang on, though. What if their list gets so long that they forget they've already put something on it? We don't want them to buy twice as much milk as they need! It would spoil! We need a way to prevent this potential catastrophe, and for that we'll need our third system test.

Our Third Feature

Let's add a new system test to check for duplicate items. We could try to catch alternative spellings and such, but that would be quite cumbersome, so for now let's just make sure our user can't have the exact same item twice in the list. Again, we'll check the new item before saving it and show an error when it duplicates an existing todo item.

- The user already has a todo item called "Buy milk" (test setup).

- The user enters a new todo in the input field, which is also "Buy milk".

- The user clicks the submit button labelled "Add todo".

- The user sees a warning message that the todo item already exists.

- No item is added to the list of todos, but "Buy milk" is still there from before.

Right, this is pretty clear. Let's get to it. The third system test is red and we want to go down into our controller again to add the logic. Now we're checking that the record is invalid, like before, but for a different reason. We'll add a controller test, but can't make it pass yet. Down we go again into the model. This is where our duplicate todo item logic will live, so we'll add in a test at the model level, make it pass, and tidy up with a bit of refactoring. This alone might be enough to make our controller test pass. And the system test too! Wow, that was efficient.
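The sketch below shows where the duplicate rule might end up. As before, it is framework-free Ruby under stated assumptions rather than Rails code. In a Rails model it would most likely be a `validates :title, uniqueness: true` declaration. Here, `Todo`, `TodosController`, the `title` attribute, the error messages and the in-memory store are hypothetical. Titles are compared exactly, matching the decision to ignore alternative spellings. Notice that `TodosController#create` has no duplicate-specific code at all. It only checks whether `save` succeeded, so any invalid todo takes the same error branch.

```ruby
# Framework-free sketch: Todo, TodosController, the messages and the
# in-memory store are hypothetical stand-ins for the Rails equivalents.
class Todo
  attr_reader :title, :errors

  @records = []

  class << self
    def all
      @records.dup
    end

    def store(todo)
      @records << todo
    end

    # Exact match only: alternative spellings are deliberately not caught.
    def duplicate?(todo)
      @records.any? { |other| !other.equal?(todo) && other.title == todo.title }
    end
  end

  def initialize(title:)
    @title = title.to_s
    @errors = []
  end

  def valid?
    @errors = []
    @errors << "Title can't be blank" if title.strip.empty?
    # New rule, driven by the third feature's model test.
    @errors << "Title has already been taken" if self.class.duplicate?(self)
    @errors.empty?
  end

  def save
    return false unless valid?

    self.class.store(self)
    true
  end
end

class TodosController
  # Unchanged by the duplicate rule: every invalid todo takes the same branch.
  def create(params)
    todo = Todo.new(title: params.fetch(:todo).fetch(:title))
    if todo.save
      { status: :created, todo: todo }
    else
      { status: :unprocessable_entity, errors: todo.errors }
    end
  end
end

controller = TodosController.new
controller.create({ todo: { title: "Buy milk" } }) # test setup
response = controller.create({ todo: { title: "Buy milk" } })
p response[:status]     # prints :unprocessable_entity
p response[:errors]     # prints ["Title has already been taken"]
p Todo.all.map(&:title) # prints ["Buy milk"]
```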

However, something slightly irks me about that. If we didn't actually have to change our controller to make the new controller test pass, then maybe that test isn't necessary. After all, the whole point of test-driven development is to write a test that drives a change in the implementation, and this one does not. The same goes for the system test. At the end of the day, the third system test is quite similar to the second: if anything bad happens, we show a warning and the todo item is not added. Perhaps we don't need that third system test, then. Rails system tests are also slow compared to unit and integration tests, because they run the full stack, usually through a real browser. Let's delete it.

The controller test is in a similar position. If anything goes wrong when saving the record, the controller just returns the error and doesn't save the record, whatever the reason. So let's get rid of the latest controller test too! As an integration test it's faster than a Rails system test, but it's still slower than a Rails unit test, and here it isn't checking anything the model test doesn't already cover.

Outside-in TDD has resulted in a whole bunch of tests being written here, only for most of them to be deleted immediately. But that's okay: it made it super simple to find the place where our tests needed to go (the model) and to get rid of any extraneous testing (in the controller and system test suites). We didn't have to delete them, of course, but what's the point in over-testing the application? Many more tests will be added to these suites over the rest of the project, so it's best to keep them lean for as long as we can keep the coverage high. Ruby on Rails test suites can quickly become bloated and slow, so cutting out redundant tests helps development speed, especially when striving for Continuous Integration and Continuous Deployment.

Wrapping Up

We've seen in this post how easy it can be to test-drive entire features in our Ruby on Rails applications using outside-in test-driven development, a simple but ultra-effective approach that starts with acceptance test-driven development. Not only does a test-first approach alleviate the classic blank page syndrome that software developers can fall foul of when starting new applications, or even just new features, but, in my opinion, it gives us something far more valuable: direction. When starting a new application, it's easy to look at a project plan and say "OK, I know I'll need this model and that model and X controller and Y controller and a whole bunch of routes", but before you know it, you've got a lot of code that does precisely nothing of value.

By following an outside-in TDD approach, you first highlight the value you want to add, then you work directly towards implementing it.

At times, it leaves you with some gaping holes in functionality, but by constantly viewing the application from the highest possible level, it's trivial to get clarity on what's missing and what the next most important feature should be.

I've purposefully kept code to a minimum here, but if you enjoyed this post and would like to see complete, hands-on Rails examples that you can follow for yourself, please get in touch with me and I'll add them to this post. Alternatively, if you think you'd benefit from software coaching, you can schedule a free first session with me here on my coaching page.

If you've got this far, firstly, thanks for reading; secondly, I have one small favour to ask of you: please share this post on your LinkedIn or Twitter account. If that's too much to ask, please follow me on LinkedIn to keep reading my material about software engineering. I'd love to hear from you in the comments or via direct message. The link is in the footer below.